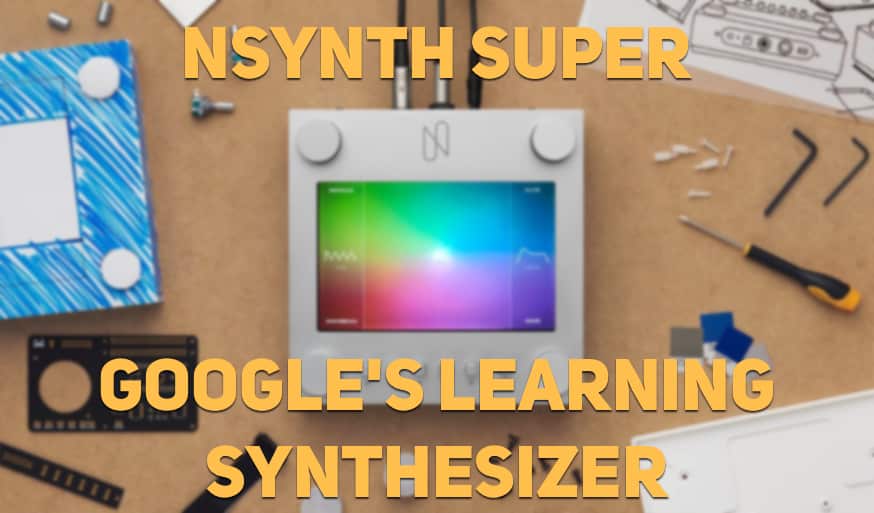

NSynth Super – Google’s Learning Synthesizer

Google recently announced the NSynth (Neural Synthesizer) Super, the product of an ongoing research experiment into machine learning technology and art. Google’s interest began with Project Magenta, an exploration of how machine learning algorithms can help artists create art and music in new ways. NSynth goes beyond simply combining sounds: it uses sounds from other synthesizers to create completely new and unique synthesized sounds. Its algorithm takes in the sonic and acoustic characteristics of multiple sounds and then uses that data to synthesize a new sound with similar characteristics. Conceptually, it’s a bit like convolution reverb, which captures the acoustic properties of a room from a recorded impulse response and then applies those properties to a new sound.
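To make the convolution reverb comparison concrete, here is a minimal sketch in plain Python (not anything taken from Google’s code). Every sample of a dry signal triggers a scaled copy of the room’s impulse response, and the copies are summed. The short impulse response below is a made-up toy value, not a real room recording.

```python
def convolve(dry, impulse_response):
    """Direct-form convolution: each input sample adds a scaled copy of the IR."""
    out = [0.0] * (len(dry) + len(impulse_response) - 1)
    for i, x in enumerate(dry):
        for j, h in enumerate(impulse_response):
            # The input sample at time i excites the room's response starting at i.
            out[i + j] += x * h
    return out


# Hypothetical toy impulse response: direct sound plus two decaying echoes.
ir = [1.0, 0.0, 0.5, 0.0, 0.25]
dry = [1.0, 0.0, 0.0]  # a single click
print(convolve(dry, ir))  # [1.0, 0.0, 0.5, 0.0, 0.25, 0.0, 0.0]
```

A click played through the "room" comes back as the impulse response itself, which is exactly why recording an impulse response captures a space. A direct double loop like this is O(N·M); practical convolution reverbs use FFT-based convolution to cope with impulse responses that are seconds long.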

[su_youtube_advanced url="https://www.youtube.com/watch?v=iTXU9Z0NYoU" showinfo="no" rel="no" modestbranding="yes" https="yes"] [/su_youtube_advanced]

The NSynth Super is a hardware device that runs the algorithm internally and can be controlled via MIDI. The device can record external sounds and then feed them into the algorithm to be combined with other sounds. It has five knobs: P(osition), A(ttack), D(ecay), S(ustain), and R(elease). The Position knob changes where playback starts within the sample, and the ADSR knobs shape the sound further by controlling its volume envelope. Check out this video to see the prototype they whipped up for some lucky musicians to play with.
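As a rough illustration of what the Position and ADSR controls do, the sketch below builds a linear ADSR gain curve and applies it to a sample starting at a chosen offset. The function names (`adsr_envelope`, `play_note`), the use of sample counts for every parameter, and the straight-line segments are all assumptions for illustration; the device’s actual envelope curves and knob ranges aren’t described in the announcement.

```python
def adsr_envelope(attack, decay, sustain_level, release, hold):
    """Linear ADSR gain curve, one value per sample (hypothetical helper).

    attack, decay, hold and release are lengths in samples (hold is how long
    the key stays down after the decay); sustain_level is a gain in [0, 1].
    """
    env = []
    # Attack: ramp from silence up to full gain.
    env += [i / attack for i in range(attack)]
    # Decay: ramp from full gain down to the sustain level.
    env += [1.0 - (1.0 - sustain_level) * i / decay for i in range(decay)]
    # Sustain: hold steady while the key is down.
    env += [sustain_level] * hold
    # Release: fade from the sustain level to silence after the key is released.
    env += [sustain_level * (1.0 - i / release) for i in range(release)]
    return env


def play_note(sample, position, envelope):
    """Start playback `position` samples into `sample` and apply `envelope`.

    If fewer samples remain than the envelope is long, the note is cut short.
    """
    segment = sample[position:position + len(envelope)]
    return [s * g for s, g in zip(segment, envelope)]


env = adsr_envelope(attack=2, decay=2, sustain_level=0.5, release=2, hold=1)
print(env)  # [0.0, 0.5, 1.0, 0.75, 0.5, 0.5, 0.25]

# Hypothetical sample: a constant signal, so the output is just the envelope.
sample = [1.0] * 20
print(play_note(sample, position=5, envelope=env))  # [0.0, 0.5, 1.0, 0.75, 0.5, 0.5, 0.25]
```

Real synths often use exponential curves instead of straight lines; linear ramps simply keep the sketch short.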

[su_youtube_advanced url="https://www.youtube.com/watch?v=0fjopD87pyw" showinfo="no" rel="no" modestbranding="yes" https="yes"] [/su_youtube_advanced]

“As part of this exploration, they’ve created NSynth Super in collaboration with Google Creative Lab. It’s an open source experimental instrument which gives musicians the ability to make music using completely new sounds generated by the NSynth algorithm from 4 different source sounds. The experience prototype (pictured above) was shared with a small community of musicians to better understand how they might use it in their creative process.”
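One way to picture blending four source sounds is to place one at each corner of a square and weight them by how close a control point is to each corner (bilinear interpolation). The sketch below assumes each sound has already been reduced to a numeric embedding vector, in the spirit of NSynth’s learned representations. The vectors are hypothetical, the corner layout is an assumption, and the step that turns a blended vector back into audio is not shown.

```python
def corner_weights(x, y):
    """Bilinear weights for a control point (x, y) in the unit square.

    Assumed corner layout: A=(0,0), B=(1,0), C=(0,1), D=(1,1).
    The four weights are non-negative and always sum to 1.
    """
    return ((1 - x) * (1 - y), x * (1 - y), (1 - x) * y, x * y)


def blend(embeddings, x, y):
    """Weighted sum of four equal-length embedding vectors (A, B, C, D)."""
    weights = corner_weights(x, y)
    dim = len(embeddings[0])
    return [sum(w * e[k] for w, e in zip(weights, embeddings)) for k in range(dim)]


# Hypothetical 2-D embeddings for four source sounds.
sources = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0], [1.0, 1.0]]
print(blend(sources, 0.0, 0.0))  # [1.0, 0.0]  (pure sound A)
print(blend(sources, 0.5, 0.5))  # [0.5, 0.5]  (equal mix of all four)
```

Bilinear weighting is just one plausible choice. It keeps every blend a convex mix of the four sources, so a point sitting on a corner reproduces that corner’s sound exactly.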

For those interested in playing around with the technology, Google actually built us a Max for Live device that uses the same algorithms as the hardware. Instead of recording your own sounds, however, you load the prerecorded samples that come in the download folder. Download it here!
